Qlik Data Integration (QDI)

Qlik Data Integration provides all the tools you need to set up a real-time data pipeline, including data ingestion and replication, and data warehouse and data lake automation. QDI also includes a data catalog that lets you govern and package data so it is ready for consumption in the analytics platform of your choice. Because QDI is platform-agnostic, you can use it with both your internal databases and all the major cloud vendors.

Data Integration Use Cases

QDI can help address these common use cases:

- Query offloading: Moving data from mainframes to other, less costly databases for reporting purposes (typical in the financial services industry)

- Data warehouse/data lake automation: Moving data from a source system to a relational database to build an analytics-ready data warehouse or data lake

- Data streaming: Streaming data from a source to a target in real time (typically using platforms such as Kafka)

What is Data Integration?

Data integration is the process of combining data from different sources to give you a unified view. You can then use this data in cloud applications, data analytics projects and other applications. To ensure a continuous flow of up-to-date data, you need a robust data pipeline.

“To lead in the digital age, everyone in your business needs easy access to the latest and most accurate data. Qlik enables a DataOps approach, vastly accelerating the discovery and availability of real-time, analytics-ready data to the cloud of your choice by automating data streaming (CDC), refinement, cataloging, and publishing” – Qlik

Qlik Data Integration (QDI) gives you instant access to the same data you hold in your internal systems and makes it available for analytics and web apps without the need for advanced development or coding.

For example:

- Real-time, log-based CDC data enables analysis of the latest transactional updates in the source relational database system.

- Online shops have instant access to stock levels and warehouse status.

What is Change Data Capture?

Change data capture (CDC) refers to the process or technology that identifies and captures changes made to a database. Qlik Replicate reads the database logs and continuously captures every change, without requiring any software to be installed on the source system's database server. This log-based approach enables real-time replication without affecting the performance of the source database.

CDC eliminates the need for bulk load updates and inconvenient batch windows by enabling incremental loading or real-time streaming of data changes into your data warehouse. It can also be used to populate real-time business intelligence dashboards, synchronise data across geographically distributed systems and support zero-downtime database migrations.
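To make incremental loading more concrete, here is a minimal Python sketch of how a stream of captured change events might be applied to a target table. It is not Qlik Replicate's implementation or API: the `ChangeEvent` structure, its `lsn` (log sequence number) field and the `apply_changes` function are hypothetical, and an in-memory dictionary stands in for a warehouse table.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ChangeEvent:
    lsn: int                              # hypothetical position in the source log
    op: str                               # "insert", "update" or "delete"
    key: int                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # changed column values; None for deletes


def apply_changes(target: Dict[int, Dict[str, Any]],
                  events: List[ChangeEvent],
                  last_lsn: int) -> int:
    """Apply events newer than last_lsn in log order; return the new checkpoint."""
    for event in sorted(events, key=lambda e: e.lsn):
        if event.lsn <= last_lsn:
            continue  # already applied in an earlier run
        if event.op == "insert":
            target[event.key] = dict(event.row or {})
        elif event.op == "update":
            # Merge only the changed columns into the existing row
            target.setdefault(event.key, {}).update(event.row or {})
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_lsn = event.lsn
    return last_lsn


# Usage: a target table plus three changes captured from the (hypothetical) log
warehouse = {1: {"sku": "A100", "stock": 5}}
log = [
    ChangeEvent(lsn=10, op="update", key=1, row={"stock": 4}),
    ChangeEvent(lsn=11, op="insert", key=2, row={"sku": "B200", "stock": 12}),
    ChangeEvent(lsn=12, op="delete", key=1),
]
checkpoint = apply_changes(warehouse, log, last_lsn=0)
print(warehouse)   # {2: {'sku': 'B200', 'stock': 12}}
print(checkpoint)  # 12
```

Because the function returns the position of the last applied change, a later run that starts from that checkpoint skips everything already processed and moves only new changes, which is the property that removes the need for a full bulk reload.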

The Qlik Data Integration Platform – From data sourcing to cataloging in one platform

Data integration begins with ingestion and includes steps such as ETL mapping and transformation. QDI brings all of these processes together in one platform that works seamlessly across on-premises and cloud environments. It has three main components:

- Qlik Replicate: Universal data replication and data ingestion

- Qlik Compose: Accelerates creation of analytics-ready data structures

- Qlik Catalog: Modern data management and cataloging

Qlik Replicate

Qlik Replicate (formerly Attunity Replicate) offers optimised data ingestion from a broad range of data sources and platforms, along with seamless integration with all major big data analytics platforms. Replicate supports both bulk replication and real-time incremental replication using change data capture. Its zero-footprint architecture eliminates unnecessary overhead on your mission-critical systems and facilitates zero-downtime data migrations and database upgrades.

Qlik Replicate supports multiple sources and platforms, so you can synchronise your data across on-premises and cloud environments. These span everything from data warehouses to legacy systems, including Oracle, SQL Server, Teradata, IBM Netezza, Exadata, AWS, Azure, Google Cloud, Cloudera, Confluent, SAP, IMS/DB, DB2 z/OS and RMS.

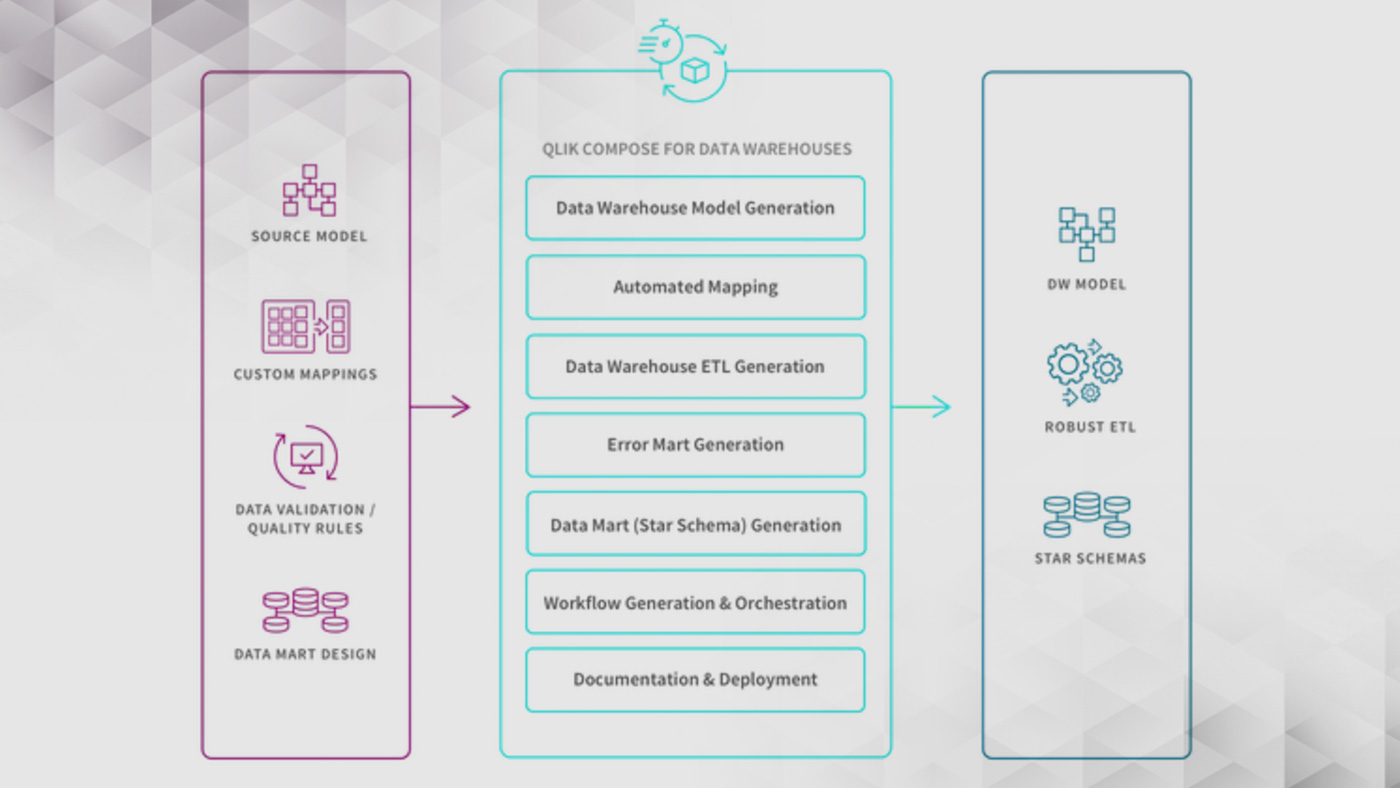

Qlik Compose

Qlik Compose automates and optimises the creation and operation of data lakes and data warehouses. In an easy-to-use interface, you can design your data warehouse or data lake and all the transformations from source through to data marts without depending on developers, all while following best practices and proven design patterns.

It dramatically reduces the time, cost and risk of data lake and data warehouse projects, whether on-premises or in the cloud. Combined with Qlik Replicate, it lets you update your data lake or warehouse continuously throughout the day instead of waiting for large overnight batch jobs.

Qlik Compose is platform-agnostic and works with a range of on-premises databases such as SQL Server, Oracle, DB2 and SAP HANA, cloud platforms including Azure, Amazon, Google BigQuery, Snowflake and Databricks, and data lakes such as Cloudera or Hortonworks.

Qlik Catalog

In self-service analytics, it is essential that users not only have access to the available data but can also find it, trace its lineage (where the data originates, what happens to it and where it moves over time) and assess its quality.

Within Qlik Catalog, you can search for data sources, tables, fields and descriptions in a shopping-like experience governed by access rules. For each item it shows the data lineage, profiling, popularity, consistency and quality, as well as a sample of what is available. You can then select the data you need, open it instantly and gain insights in your BI tool of choice: Qlik, Tableau or Power BI.
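As a rough illustration of what tracing data lineage involves, the Python sketch below models lineage as a graph in which each dataset lists the datasets it was derived from, then walks that graph to find every upstream dataset and the original source systems. The dataset names, the `LINEAGE` mapping and the `upstream`/`origins` helpers are all hypothetical; this is not how Qlik Catalog stores or exposes lineage.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage graph: each dataset maps to the datasets it was built from.
LINEAGE: Dict[str, List[str]] = {
    "sales_dashboard": ["sales_mart"],
    "sales_mart": ["orders_clean", "customers_clean"],
    "orders_clean": ["erp.orders"],
    "customers_clean": ["crm.customers"],
    "erp.orders": [],
    "crm.customers": [],
}


def upstream(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return every dataset that feeds into `dataset`, directly or indirectly."""
    seen: Set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:  # breadth-first walk towards the sources
        parent = queue.popleft()
        if parent in seen:
            continue
        seen.add(parent)
        queue.extend(lineage.get(parent, []))
    return seen


def origins(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Return the source systems: upstream datasets with no parents of their own."""
    return {d for d in upstream(dataset, lineage) if not lineage.get(d)}


print(sorted(origins("sales_dashboard", LINEAGE)))  # ['crm.customers', 'erp.orders']
```

Walking the same graph in the opposite direction (from a source to everything derived from it) would answer the complementary question of which reports are affected when a source table changes.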

Ready to get started with Qlik Data Integration?

Get in touch and we can work through your options!

James Sharp

Managing Director

james.sharp@climberbi.co.uk

+44 203 858 0668

Tom Cotterill

Senior BI Consultant

tom.cotterill@climberbi.co.uk

+44 203 858 0668